Algorithms do (not) know prejudices

By Kerstin Prothmann

Once upon a time there was the idea of anonymous applications (Weber, 2014). Recruiters and managers should not be blinded by age, looks, migration background or gender, but should decide objectively on the basis of education and work experience (Gros, 2012). Would it not be great if Mehmet were no longer assumed to have insufficient language skills just because of his name, or Hans-Jürgen to be constantly off sick because of his age? Of course we trust women to lead teams; but when it comes to petite Silke with the long blond hair, do we suddenly have doubts?

Although the idea of anonymous applications without age, photo or name sounds convincing at first, it never became a common standard. On the contrary, many candidates not only willingly share their private lives on Facebook but also make their career progression visible to everyone on LinkedIn and Xing.

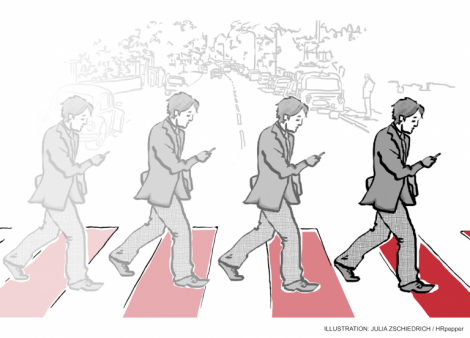

The time now seems to have come to stop letting prejudiced people make the decisions about hiring and development. Computer algorithms can assist in selecting applicants, even though people still have to make the final decision. Employee selection and development could be oriented more towards objective facts, so that a female applicant with a migration background would no longer be screened out at the first glance at her photo.

How do these algorithms, which are also known as “artificial intelligence”, work? The method applied in our case is pattern recognition. Computer programs analyse large volumes of data, e.g. CVs, qualifications and references, and recognise certain patterns. For example, it could be that internal audit employees are more successful if they previously worked in one of the company's specialist departments rather than being hired directly from an auditing firm. It could also be that controllers are particularly successful if they studied marketing or HRM as a minor subject. Or it could turn out that career success in marketing does not depend on the subject studied. These patterns can be used to forecast people's suitability for certain jobs or to find suitable candidates for vacant positions in the talent pool. With this objective approach, discrimination based on homophily or prejudice could be reduced. Many software providers already integrate such approaches (e.g. SAP SuccessFactors, Saba); the functions are often summarised under the term “Talent Analytics”.
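To make the idea of pattern recognition more concrete, here is a minimal sketch in Python. It is not how any of the products named above work; the records, the field names and the functions `success_rates` and `forecast` are hypothetical and only illustrate the principle of deriving a forecast from historical success patterns.

```python
from collections import defaultdict

# Hypothetical, hand-made records for internal audit staff:
# (previous background, was the employee rated as successful?)
HISTORY = [
    ("specialist_department", True),
    ("specialist_department", True),
    ("specialist_department", False),
    ("auditing_firm", True),
    ("auditing_firm", False),
    ("auditing_firm", False),
]


def success_rates(records):
    """Return the observed share of successful employees per background."""
    counts = defaultdict(lambda: [0, 0])  # background -> [successes, total]
    for background, successful in records:
        counts[background][0] += int(successful)
        counts[background][1] += 1
    return {b: s / n for b, (s, n) in counts.items()}


def forecast(background, rates, default=0.5):
    """Forecast suitability as the historical success rate of that background.

    Backgrounds never seen before get an arbitrary default value.
    """
    return rates.get(background, default)


rates = success_rates(HISTORY)
for background in ("specialist_department", "auditing_firm", "start_up"):
    print(background, round(forecast(background, rates), 2))
```

Note that the forecast can only repeat what the historical data contains: a background that was rarely hired or rarely rated well in the past will score poorly, whatever the reason for that was.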

Is the prejudiced human being going to be replaced by meritocratic Talent Analytics systems? Unfortunately, empirical studies indicate that such algorithms are not reliable in this respect. One study by Carnegie Mellon University in the US revealed that advertisements for highly paid jobs are shown less often to women than to men. But if women cannot see well-paid vacancies, they cannot apply for them. The researchers do not see the cause of this de facto discrimination in the preferences or settings of the hiring companies, or in Google's usual pre-configurations (Spice, 2015). Instead, the algorithm, which optimises ad placement based on the (supposed) interests of the internet user and which the researchers can only treat as a black box, came to the conclusion that women are less likely than men to click on those vacancies. The allegedly neutral artificial intelligence algorithm simply reproduces an already existing social phenomenon: pattern recognition!
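The mechanism described above can be illustrated with a small sketch. Since the real ad placement algorithm is a black box, this is not a reconstruction of it; the click figures and the function `allocate_impressions` are assumptions chosen only to show how optimising for expected clicks can turn a past difference into unequal visibility.

```python
# Hypothetical historical clicks per 1,000 views of a highly paid job ad.
HISTORICAL_CLICKS_PER_1000 = {"men": 60, "women": 40}


def allocate_impressions(clicks_by_group, budget):
    """Split an ad budget across groups in proportion to past clicks.

    A click-optimising system has no notion of fairness: it simply shows
    the ad more often where it expects more clicks.
    """
    total = sum(clicks_by_group.values())
    return {
        group: budget * clicks // total
        for group, clicks in clicks_by_group.items()
    }


impressions = allocate_impressions(HISTORICAL_CLICKS_PER_1000, budget=1000)
print(impressions)
```

In this toy setting women see the ad less often than men although no one configured the system to treat them differently. Fewer views in turn mean fewer future clicks, so the recorded difference can reinforce itself.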

Similar types of discrimination by artificial intelligence algorithms have been observed in very different application areas. One piece of software that is popular in the US judicial system uses a questionnaire to assess the risk that offenders will reoffend. A study by the journalism organisation ProPublica reveals that the risk score, i.e. the estimated probability of relapsing into crime, is substantially overestimated for black people (Angwin, 2016).
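One simple way to check whether a risk score overestimates the risk for one group is to compare, per group, how many people who did not reoffend had nevertheless been flagged as high risk. The following sketch uses invented records and a hypothetical helper `false_positive_rate`; it does not use the data or the exact method of the study cited above.

```python
# Hypothetical records: (group, flagged as high risk?, actually reoffended?)
RECORDS = [
    ("A", True, False),
    ("A", True, True),
    ("A", False, False),
    ("A", True, False),
    ("B", False, False),
    ("B", True, True),
    ("B", False, False),
    ("B", True, False),
]


def false_positive_rate(records, group):
    """Share of non-reoffenders in `group` who were flagged as high risk."""
    flags = [
        flagged
        for g, flagged, reoffended in records
        if g == group and not reoffended
    ]
    if not flags:
        return None  # no non-reoffenders in this group, rate is undefined
    return sum(flags) / len(flags)


for group in ("A", "B"):
    print(group, round(false_positive_rate(RECORDS, group), 2))
```

If the rates differ clearly between groups, as they do in this invented example, the score is wrong more often to the disadvantage of one group, even if its overall accuracy looks acceptable.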

The increasing popularity of allegedly intelligent algorithms, in HRM as elsewhere, comes with a series of opportunities and threats. Intelligent algorithms might help recruiters to recognise promising talent profiles efficiently and without prejudice. However, those profiles are based on outdated patterns, so they contribute to the replication, maintenance and solidification of existing structures: if married men from elite universities have appeared successful (according to the evaluation), the algorithm will suggest similar people again. Such self-reproducing talent pools are not desirable, particularly in a dynamic environment.

As helpful as these artificial intelligence methods are, they must not be used as a black box. At least a basic understanding of their effects and intermediate results is essential for applying their results critically.

Sources: